The Japan-based Sakura Checker website evaluates reviews of major online shopping sites and also scrutinizes questionable product reviews.

The website's original mission was to detect whether product reviews were written by actual users or at the behest of the companies selling the products.

But now the site's operator, who goes by the handle "Yu," has begun to check for AI-generated reviews.

Yu says in April he began noticing a new type of product review. At first glance such reviews appeared to be written in natural Japanese, but Yu detected a suspicious pattern that he hadn't seen before.

Common patterns

Yu says the pattern has several characteristics.

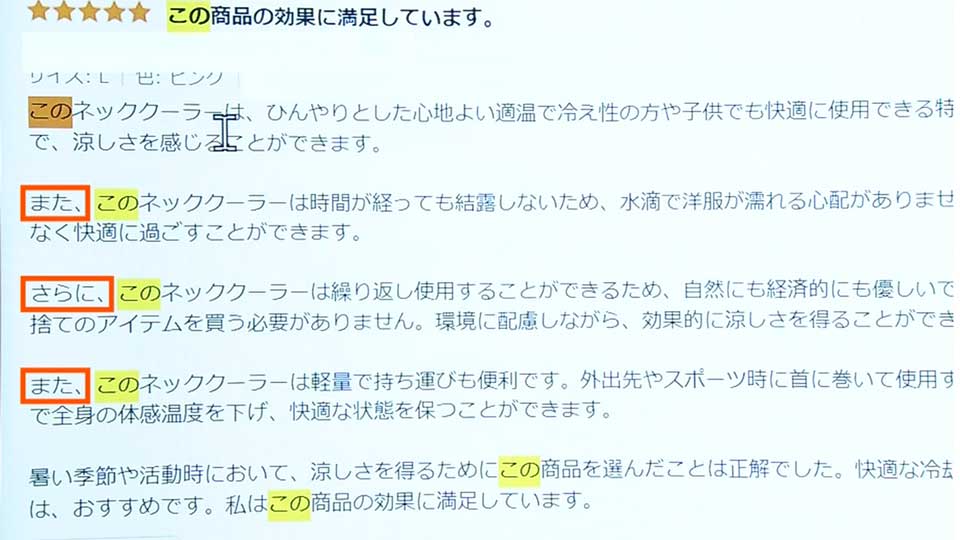

One characteristic is the repeated use of the phrase "this product."

Yu says, "Repetitive use of the same words raised a red flag for me because it's not seen in real reviews."

A second characteristic is the frequent use of conjunctions at the beginning of sentences such as "and" and "also."

A third characteristic is that the review comprehensively summarizes the function and use of the product.

Yu says, "Many reviews focus on one point of the product, and whether it's good or not. But suspicious comments comprehensively summarize the product's elements in an essay-like manner. This led me to think they're written by AI."
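The first two characteristics are simple enough to count mechanically. The following is a minimal sketch of how that counting might look, not Yu's actual system, which the article does not describe. The function names, the English word list and the sentence splitter are all assumptions made for illustration; real Japanese reviews would need a Japanese tokenizer. The third characteristic, essay-like comprehensiveness, is not captured here.

```python
import re

# Assumption: only the two conjunctions named in the article are checked.
CONJUNCTIONS = ("and", "also")


def split_sentences(text):
    """Naive splitter: break after ., ! or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def review_signals(text, phrase="this product"):
    """Count two of the surface patterns described above (hypothetical helper)."""
    sentences = split_sentences(text)
    # Sentences that open with one of the listed conjunctions.
    opener = re.compile(r"(?:%s)\b" % "|".join(CONJUNCTIONS), re.IGNORECASE)
    conjunction_starts = sum(1 for s in sentences if opener.match(s))
    return {
        "sentences": len(sentences),
        "phrase_count": text.lower().count(phrase),
        "conjunction_starts": conjunction_starts,
    }


sample = (
    "This product is great. And this product is light. "
    "Also, this product keeps me cool. I like it."
)
signals = review_signals(sample)
print(signals)
```

Counts like these are only signals. A genuine reviewer can also repeat a phrase or open a sentence with "and," so any cutoff for flagging a review would have to be chosen by comparing known genuine and known generated text.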

Comparisons with AI-generated sentences

To put his suspicions to the test, Yu generated reviews using ChatGPT. He told the application to write reviews of a neck cooler.

ChatGPT quickly wrote sentences such as "The product is a savior in summer!" and "I bought them recently and I was really surprised at their effectiveness."

The sentences shared the same characteristics as the reviews Yu found suspicious, including repeated use of conjunctions and of phrases like "this product." They were also divided into very easy-to-read paragraphs.

Yu also noticed that if ChatGPT was given manufacturers' product descriptions, it would generate user reviews that similarly mentioned those details.

One suspicious product review of a motorcycle rain cover included the same points as the manufacturer's product description. The review noted that the cover was made of a material that is "XX denier" and that its "water resistance exceeds XX Pascal."

Yu proceeded to give manufacturers' product explanations to ChatGPT and asked it to write reviews. The results were almost identical to suspicious reviews, using almost the same word orders.

Yu also found that the same AI-generated text is reused across reviews.

He noticed several pages of reviews for different manufacturers' portable air conditioners contained the same text and were posted by the same account.

This led Yu to believe someone used AI to write fictitious reviews, and then copied the same text into reviews of other products.

To discern the trend, Yu instructed AI to produce more than 1,000 reviews while changing the names of the products. He then analyzed patterns by comparing them.

He also used a tool to determine how similar sentences were. Yu found that in some cases nearly 80 percent of the sentences used in suspicious reviews were identical or similar to the comments he generated with AI.
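The article does not name the similarity tool Yu used. As a rough sketch of the idea, the comparison can be approximated with Python's standard `difflib.SequenceMatcher`. The function name `matched_fraction`, the 0.8 threshold and the sentence splitter are assumptions for illustration, and the sample sentences are invented.

```python
import re
from difflib import SequenceMatcher


def split_sentences(text):
    """Naive splitter: break after ., ! or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def matched_fraction(review, generated_reviews, threshold=0.8):
    """Share of sentences in `review` that are identical or similar to
    some sentence in the generated reviews (hypothetical helper).

    `threshold` is an assumed cutoff on SequenceMatcher's 0-to-1 ratio.
    """
    candidates = [
        s.lower() for g in generated_reviews for s in split_sentences(g)
    ]
    sentences = [s.lower() for s in split_sentences(review)]
    if not sentences or not candidates:
        return 0.0
    hits = 0
    for sentence in sentences:
        # Best similarity between this sentence and any generated sentence.
        best = max(SequenceMatcher(None, sentence, c).ratio() for c in candidates)
        if best >= threshold:
            hits += 1
    return hits / len(sentences)


generated = ["This product is a savior in summer! Also, it is light."]
suspicious = "This product is a savior in summer! I use it every day."
fraction = matched_fraction(suspicious, generated)
print(fraction)
```

With this kind of measure, a result near 0.8 would correspond to the "nearly 80 percent" figure Yu reports, although his tool may define similarity differently.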

Yu eventually created a system that estimates, based on their patterns, how likely it is that sentences were written by AI.

Yu says since April his system has flagged hundreds of reviews that are suspected of being generated by AI.

He says he'll continue to look for patterns, but notes it may become more difficult to detect them as AI grows more sophisticated.

Yu says, "I'm able to notice patterns now, but I'm concerned that AI will be able to create more authentic reviews as it evolves. The purpose of a review is to write your impression of something you've actually used. But AI can create fake user impressions and that makes it difficult to tell which reviews are genuine. This is very troubling."

Amazon's position on the use of AI

How are e-commerce websites handling the situation?

A check of Amazon's review rules found no mention of AI. When NHK queried its Japanese subsidiary, a representative said, "There are some cases but not many in which customers write reviews with the help of artificial intelligence."

Amazon Japan added that the use of AI for reviews is not banned as long as it reflects the customers' product experiences and meets the company's guidelines.

But it says it is strictly dealing with reviews that violate its guidelines, such as pretending to be someone else or plagiarizing sentences.

Who wrote these reviews?

NHK tracked down people believed to be using an account that posted suspicious reviews and attempted to interview them.

Some responded and denied using AI without going into detail, while others simply ignored our request.

Can AI reviews be detected with certainty?

We asked several experts whether there are foolproof means of telling if reviews are written by AI. All said it's difficult to reliably do so in the case of short Japanese sentences, such as those used in reviews.

OpenAI, the operator of ChatGPT, offers a free tool on its website to determine if sentences are written by AI. But it says at least 1,000 characters are needed to accurately judge text, and that the tool was primarily developed for English sentences. It says results for other languages may not be reliable.

Professor Echizen Isao of the National Institute of Informatics says, "The use of AI will continue to develop not only for writing but also for images and audio. If everyone can access AI tools, some people will put them to malevolent use."

He adds, "It will become difficult to judge the veracity of information by viewing it alone. In the future, we will have to use other sources to verify it."