Chatbots like ChatGPT, personalized social media feeds and facial recognition software are just some of the ways that AI has become a part of daily life. But the world is struggling to keep up with the evolving technology ― a problem this summit was intended to address. Almost 30 countries were represented, including China, the United States and Japan, in addition to the European Union (EU).

Since the summit, the EU has agreed to its AI Act, which is set to become the first comprehensive legislation regulating AI. But, while a final vote is expected soon, the law would only come into force in 2025 at the earliest.

High-level talks are clearly just beginning, but AI is already making an impact. Several professionals explained to NHK how they are using AI in their work.

AI in the workplace

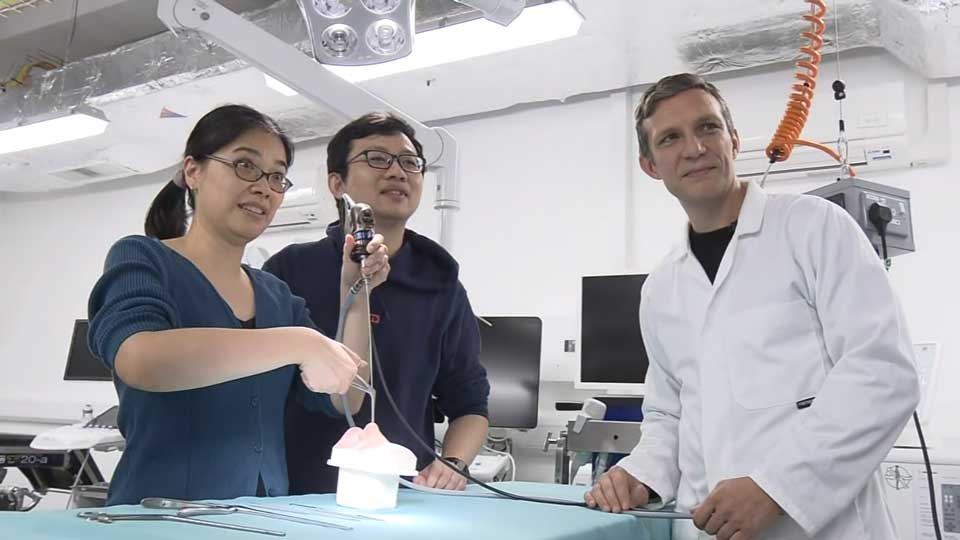

A limited number of hospitals in Britain are using AI as a warning system to improve patient safety.

The technology is helping surgeons avoid critical structures, such as key blood vessels and nerves, when performing an operation. It works by acting as a virtual guideline on top of original camera footage of the patient.

Dr Dan Stoyanov, who leads the project at University College London, said the system is both making surgery safer and helping to train junior surgeons.

But he cautioned that there are still risks due to the differences in human anatomy. "It's only by being in the clinic and being in many clinics, that we can learn more and really understand the diversity of events and structures that appear during surgical procedures," he explained.

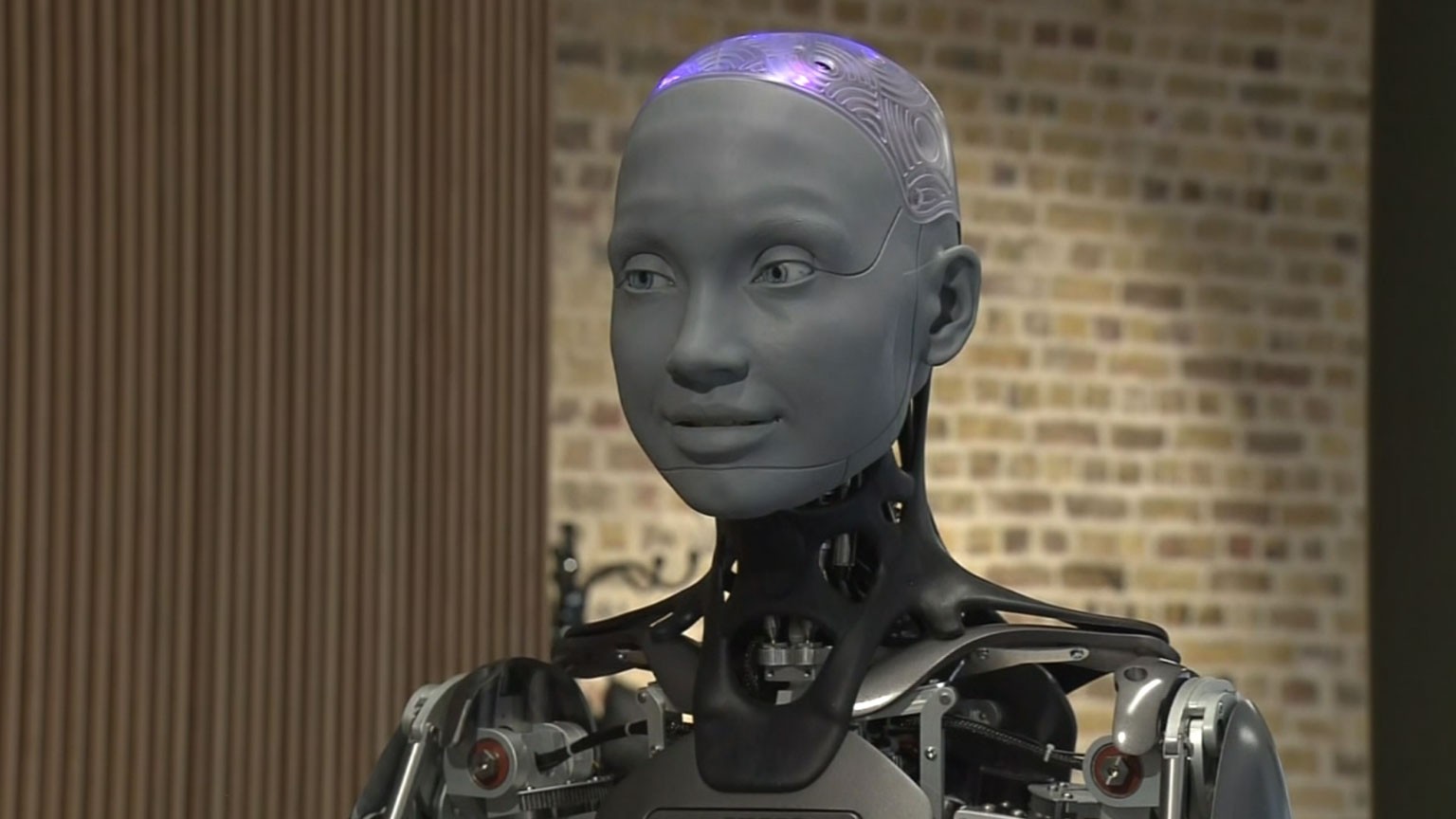

Those in the events industry are also utilizing AI. A humanoid robot called Ameca uses ChatGPT to communicate with customers.

It can interact in over 20 languages, including Japanese, English and Chinese, with relatively lifelike answers and realistic facial expressions.

When asked, "Do you think that AI could be a threat to human beings?" it replied: "Only if someone programs it to be ― otherwise, I'm harmless."

Carlo Sangilles, an engineer at the company that developed Ameca, said it was designed as a tool for humans and would not take over someone's job. He said AI robots could be installed in nursing homes as companions for the elderly.

While Ameca is unable to walk and talk simultaneously, Sangilles expected this would be possible within the next 15 years. But he noted that there is uncertainty over how AI will develop.

"Godfather of AI" urges caution

The unknowns of AI development worry many experts ― even Yoshua Bengio, who is known as a "Godfather of AI."

The computer scientist attended the summit, where he was appointed to produce a report on Frontier AI: next-level technology that has the potential to perform a wide range of tasks.

Bengio, a professor at the University of Montreal, explained that the danger will come when AI surpasses the intelligence of humans across multiple fields. He wants governments to take urgent action.

"We have to be smart about how we can monitor the development of these systems, regulate them and trust each other," he said.

Calls to pause development

Critics claim the summit did not make enough progress in addressing the safety issues of AI.

One organization that was represented, the Future of Life Institute, shared a roadmap of measures that it wants governments to take. Proposals included legally binding safety standards and a limit on the amount of power and resources AI is permitted to use.

Executive Director Anthony Aguirre also raised concerns that AI systems could be integrated into militaries within three to five years. He strongly warned against making life-or-death decisions using AI.

Another group, Pause AI, protested outside the summit to demand a halt to all new AI development until regulators are given time to catch up.

Schoolkids discuss future of AI

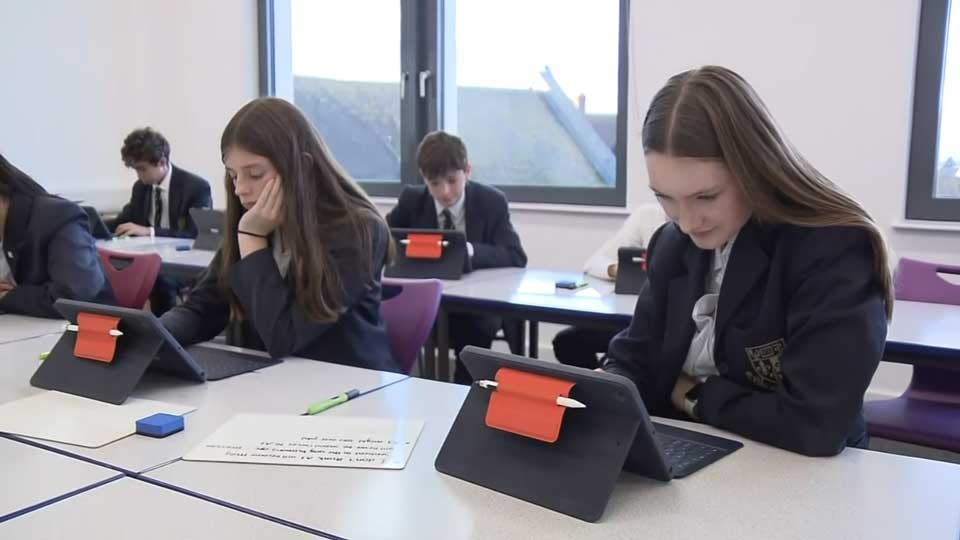

AI will most affect young people over the coming years ― in the classroom and outside of it. Schools are responding by starting to teach AI literacy.

Epsom College, a high school outside London, is a leader in AI. The technology features in learning platforms that the children there use to study.

Students told NHK they are both excited about the future and aware of the risks.

One teenage boy said that if AI takes over menial jobs, people will have a chance to realize their potential by being more creative or trying a different career.

"What we have over AI is we have empathy," suggested a 12-year-old girl. "So, with really important things, where maybe people's lives are on the line, we're less likely to trust AI than ourselves because even though it's more accurate, it doesn't understand the importance of what's at stake."

Deputy head teacher Richard Alton is particularly concerned about social media and chatbots, which he finds many teenagers rely upon. The platforms have become a hotspot for misinformation, such as deepfakes that can be created with AI.

Alton urged that technology companies be held accountable. One solution, suggested by Bengio, would be a requirement for AI-generated content to come with a warning label on social media.

In the run-up to the next summit, to be hosted by South Korea in mid-2024, there is a growing consensus that those in power need to act now to ensure that AI is used to better the world. Until then, people will continue to wonder about AI and predict how it will next impact our lives.